Data Quality – Catching Rule Violations

Introduction

This is my third installment of blog articles about Data Quality. In the first article, I postulated that data has quality when it has an acceptable level of errors. The goal of course is no errors, but that might be too expensive to insure. Data governance, master data management (MDM), or another data architecture team charged with the governance of corporate data, needs to, among other things:

- assess and monetize the cost of errors vs. the cost of detection;

- define and limit data values within the information context; and,

- employ the right tools and techniques to find errors or suspicious values.

In the previous article, we demonstrated some ways to catch errors that appear wrong on their face. Without knowing the application these data just looked wrong; e.g. alpha characters in a numeric field, impossible dates, missing special characters, and so forth. In this article, we show how to find errors in data that looks right, but contains values outside of an acceptable range for a specific application. This would take the forms of membership: 1) numerical values within a range; or, 2) discrete values that should or should not be present.

Planning

After finding real or possible errors, an additional responsibility is to discover the correct value and insert it into the data store. If that is not possible, those managing the data must report to the application groups about specific data that is not to be trusted. Before programming begins, there should exist an information diagram that associates data names with possible values, or, an acceptable range of values for each datum. Having rules provides two benefits to the organization:

- a central repository of data names and rules for the data’s format and use;

- a programmatic repository for the error-checking-software to follow.

The construction of this information requires knowledge and agreement of users about shared data. This rules library could be the only place where such knowledge is retained. The obvious place to begin is with errors that have been previously discovered. There would be no excuse for allowing the same error twice. Woe to the manager who discover errors after the production job is run when such errors could have been discovered through pre-processing. Then add the errors that might be anticipated.

Programming

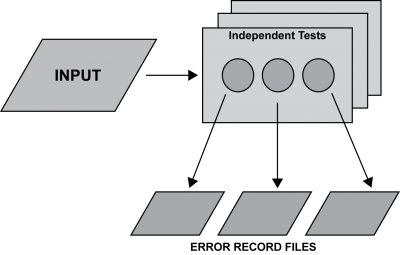

Now comes the technical work of actually writing and running the data checking programs. This work is never finished because database contents are always changing, and sometimes the rules do, too. These programs must run in this kind of dynamic data environment, and whenever there are available machine cycles. The flow chart looks like this:

How IRI Does It

In the CoSort data management package — as well as its spin-off products for data migration, masking, and generation — IRI uses a 4th generation language (4GL) called SortCL to define data layouts and manipulations. SortCL has constructs for searching files and tables, testing for conditions, combining transformations, and reporting to multiple targets.

SortCL jobs are defined in scripts written by hand or automatically generated by GUI wizards in an Eclipse-based IDE. The GUI, called IRI Workbench, also features online data discovery and metadata definition, along with job design, execution, and management systems. What makes the language and GUI particularly useful in finding errors is their ability to read from multiple data sources at once, apply mapping rules, and direct output to multiple targets.

Each target can have its own associated conditions, formats and contents. Whether or not a record contains an error, or is suspicious, its evaluation occurs through tests defined and assigned for each output. Time-wise, the output files or tables are typically small; incremental time differences as we specify additional target conditions are negligible.

In the following example, we are reading a Payroll file. In practice, the table would have thousands of entries and it would be too difficult to catch errors on sight. To automate error-checking, we have created look-up tables, or SET files, that contain only correct values for several fields. We also defined certain rules about jobs and ages. Finally, we inserted errors into the payroll to see if the program catches errors in membership (i.e. values out of range), and other rule violations.

Payroll

Benny, Jack M 39 Comedian 88900 Black, Robert M 54 JVP 25600 Carson, Johnie M 68 Sculpter 67820 Einstein, Al M 124 Genius 55000 Gleason, Jackie M 44 Hustler 45600 Green, Harry M 102 Guard 31500 Grey, Damson F 44 Clerk 101000 Heston, Charles M 44 Actor 45980 james, Henry M 38 Groom 56789 Hoffa, Jimmy M 66 Pilot 67000 Jones, John M 37 Clerk 37000 Perry, Jane F 56 Guard 98772 Shapiro, Edie F 45 Welder 42123 Smith, Mary F 42 CPA 62000 Stander, Gary M 55 Guard 43567 White, Adam M 45 VP 126000 White, Mary F 06 Clerk 45478

Good_Users.set * Legitimate_Jobs.set

Black, Robert Acct Clerk 35000 Carson, Kit CPA 45000 Einstein, Al Lab Guard 18500 Gleason, Jackie JVP 42500 Green, Harry Product Keeper 57000 Grey, Damson Guard Genius 34567 Heston, Charles Lot Pilot 75000 james, Henry Stable VP 99000 Jones, John Acct Welder 34500 Perry, Jane Front Shapiro, Edie Shop Smith, Mary Acct Stander, Gary Factory White, Adam Office

CoSort SortCL Error Checking Script

/INFILE = Payroll # source file metadata /FIELD = (User, POSITION = 01, SIZE = 15) /FIELD = (Gender,POSITION = 17, SIZE = 1) /FIELD = (Age, POSITION = 21, SIZE = 3) /FIELD = (Job, POSITION = 26, SIZE = 10) /FIELD = (Salary, POSITION = 36, SIZE = 6) /REPORT # sorting not req'd /OUTFILE = Unrecognized_People # typo? fraud ?, . . ) /FIELD = (User, POSITION = 01,SIZE = 15) /FIELD = (Check_user, POSITION = 20,SIZE = 12, SET = Good_Users.set[User] DEFAULT="Unknown User") /INCLUDE WHERE Check_user EQ "Unknown User" # /OUTFILE = Invalid_Jobs # Jobs not on approved list /FIELD = (User, POSITION = 01,SIZE = 15) /FIELD = (Job, POSITION = 20,SIZE = 10) /FIELD = (Job_check, POSITION = 35,SIZE = 11,SET = Legitimate_Jobs.set[Job] DEFAULT="No such Job") /INCLUDE WHERE Job_check EQ "No such Job" /OUTFILE = Age_Violations # Age related problems /FIELD= (User, POSITION = 01,SIZE = 15) /FIELD = (Age, POSITION = 20,SIZE = 5) /FIELD = (Job, POSITION = 25,SIZE = 10) /INCLUDE WHERE Age < 18 OR Age > 100 /INCLUDE WHERE Age > 65 AND Job EQ "Pilot " /OUTFILE = Salary_Limits # something with salary /FIELD = (Job, POSITION = 5,SIZE = 10) /FIELD = (User, POSITION = 15,SIZE = 15) /FIELD = (Salary, POSITION = 30, SIZE = 6) /INCLUDE WHERE Job EQ "Clerk " AND Salary GT "100000" /INCLUDE WHERE Job EQ "Guard " AND Salary GT " 45000"

Output Files:

Unrecognized_People

Benny, Jack Unknown User Carson, Johnie Unknown User Gleason, Jackie Unknown User Hoffa, Jimmy Unknown User

Invalid_Jobs

Benny, Jack Comedian No such Job Carson, Johnie Sculpter No such Job Gleason, Jackie Hustler No such Job Heston, Charles Actor No such Job James, Henry Groom No such Job Hoffa, Jimmy Pilot No such Job

Age_Violations

Einstein, Al 124 Genius Green, Harry 102 Guard Hoffa, Jimmy 66 Pilot White, Mary 06 Clerk

Salary_Limits

Clerk Grey, Damson 101000 Guard Perry, Jane 98772